Stereo Learning Trajectories

Learning-based collision-free trajectories from stereo images

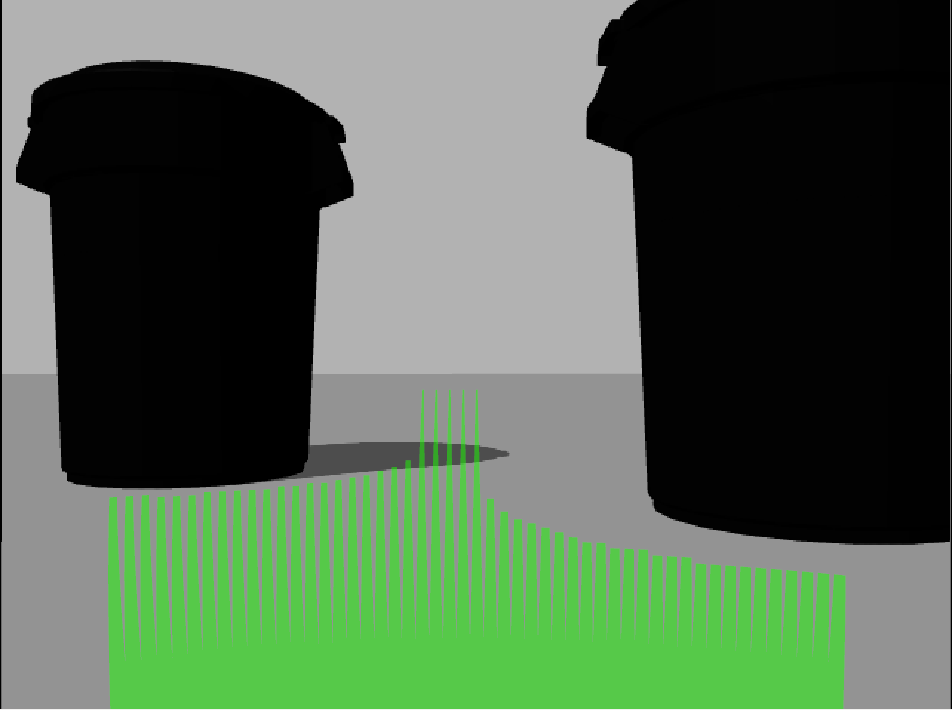

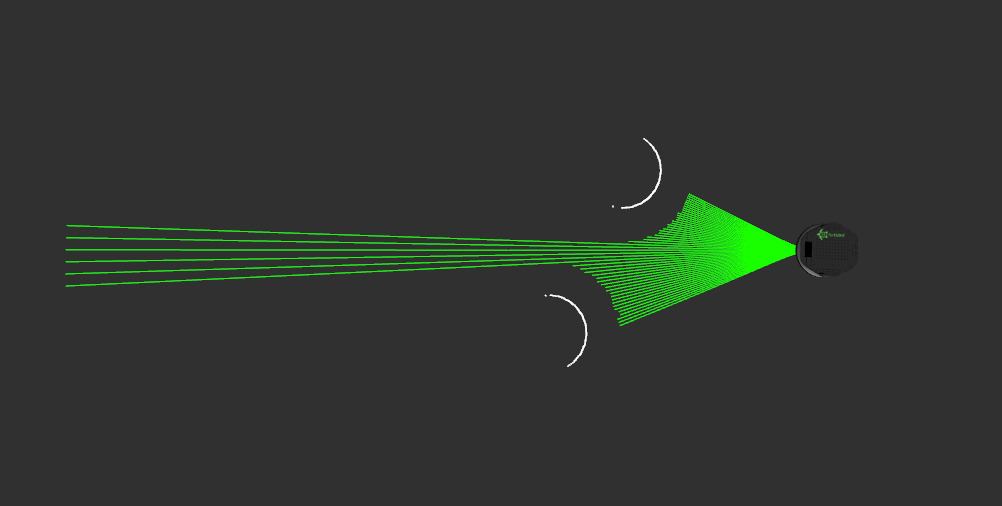

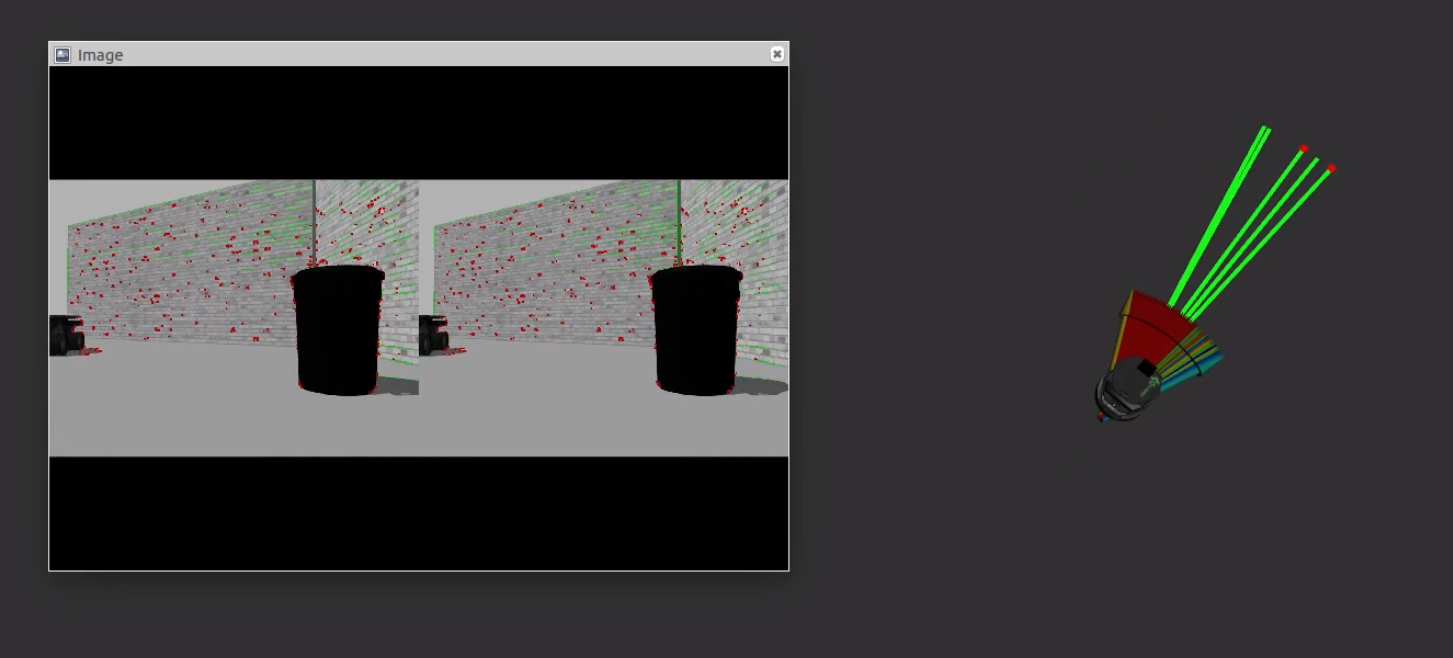

Deep learning guides trajectory selection in a local planner equipped with a stereo vision system. The motivation is to search for potentially collision-free local trajectories without estimating a dense depth image from the stereo camera. We fine-tune AlexNet to classify local trajectory samples from the sensor inputs: stereo image pairs and ego laser-scan information.
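To treat trajectory selection as classification, the continuous departure angle of a trajectory has to be mapped to a discrete class. The sketch below shows one plausible discretization. The number of bins (`NUM_BINS`) and the angular range (`MAX_ANGLE`) are hypothetical; the source does not state them.

```python
import math

# Hypothetical discretization: the number of classes and the angular range
# are assumptions for illustration, not values from the original project.
NUM_BINS = 9
MAX_ANGLE = math.radians(45.0)  # departure angles span [-MAX_ANGLE, MAX_ANGLE]


def angle_to_class(angle: float) -> int:
    """Map a continuous departure angle (radians) to a class index."""
    clipped = max(-MAX_ANGLE, min(MAX_ANGLE, angle))
    width = 2 * MAX_ANGLE / NUM_BINS
    idx = int((clipped + MAX_ANGLE) // width)
    return min(idx, NUM_BINS - 1)  # an angle exactly at MAX_ANGLE goes to the last bin


def class_to_angle(idx: int) -> float:
    """Return the center angle (radians) of class `idx`."""
    width = 2 * MAX_ANGLE / NUM_BINS
    return -MAX_ANGLE + (idx + 0.5) * width
```

With this labeling, a softmax over `NUM_BINS` outputs gives one confidence per departure angle, and `class_to_angle` turns each class back into an angle from which a trajectory can be generated.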

During model training, the dataset is split into a training set and a validation set, and the validation set is used to tune parameters.
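A minimal, reproducible split can be sketched as follows. The 80/20 ratio (`val_fraction=0.2`) and the fixed `seed` are assumptions; the source does not give the proportions.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def train_val_split(samples: Sequence[T], val_fraction: float = 0.2,
                    seed: int = 0) -> Tuple[List[T], List[T]]:
    """Shuffle sample indices reproducibly and split into (train, validation)."""
    if not 0.0 < val_fraction < 1.0:
        raise ValueError("val_fraction must be in (0, 1)")
    indices = list(range(len(samples)))
    random.Random(seed).shuffle(indices)  # seeded so the split is repeatable
    n_val = int(round(len(samples) * val_fraction))
    val = [samples[i] for i in indices[:n_val]]
    train = [samples[i] for i in indices[n_val:]]
    return train, val
```

Consecutive frames from one recording are highly correlated. Splitting by recording rather than by frame may therefore give a more honest validation score.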

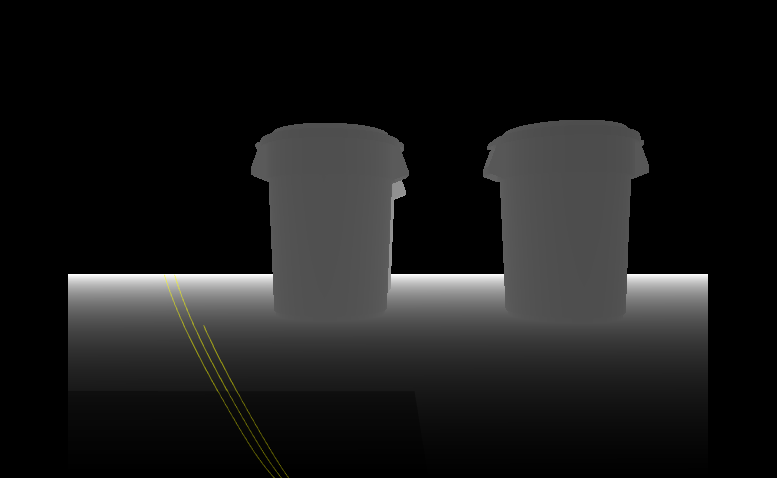

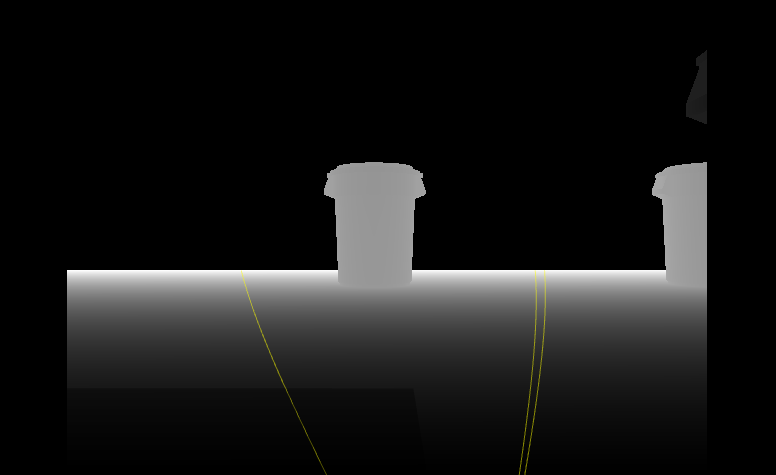

Beyond test accuracy, we need to evaluate the approach together with our collision-checking and navigation strategy. First, the selected trajectories are visualized in the depth image to check that they are reasonable. Then the trajectories sampled from the deep network's output (informed sampling) are integrated into the local planner.
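Overlaying a trajectory on the image requires projecting its ground-plane points into pixel coordinates. Below is a sketch using the standard pinhole model. It assumes a rectified camera, flat ground, and hypothetical intrinsics (`FX`, `FY`, `CX`, `CY`) and mounting height (`CAM_HEIGHT`). These are not the project's calibration.

```python
from typing import List, Optional, Tuple

# Hypothetical pinhole intrinsics and camera height; replace with the
# calibrated values of the actual stereo rig.
FX, FY = 500.0, 500.0   # focal lengths in pixels
CX, CY = 320.0, 240.0   # principal point in pixels
CAM_HEIGHT = 0.5        # camera height above the (assumed flat) ground, meters


def project_ground_point(x: float, z: float) -> Optional[Tuple[float, float]]:
    """Project a ground point (x right, z forward, meters) to pixel (u, v).

    Camera frame convention: X right, Y down, Z forward, so a point on the
    ground lies at Y = CAM_HEIGHT. Points at or behind the camera return None.
    """
    if z <= 0.0:
        return None
    u = FX * x / z + CX
    v = FY * CAM_HEIGHT / z + CY
    return (u, v)


def project_trajectory(points: List[Tuple[float, float]]) -> List[Tuple[float, float]]:
    """Project every visible trajectory point; points behind the camera are dropped."""
    pixels = []
    for x, z in points:
        p = project_ground_point(x, z)
        if p is not None:
            pixels.append(p)
    return pixels
```

The resulting pixel list can be drawn over the depth image, for example with OpenCV's `cv2.polylines`, to check visually whether a selected trajectory passes through free space.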

In the bottom figure, the colorized departure angles are the output of the deep network. Different colors represent different confidence levels; red indicates higher confidence. The top 5 trajectories are generated from the corresponding angles, and collision checking is then performed on these selected trajectories.
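The selection step described above keeps the five most confident angles, turns each into a trajectory, and rejects any that collide. The sketch below illustrates it. Only "top 5" comes from the source. Straight-line trajectories, the robot radius, horizon, step size, and the point-distance collision test against laser-scan endpoints are simplifying assumptions. The real planner may use curved motion primitives and a different checker.

```python
import heapq
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

TOP_K = 5               # from the text: top 5 trajectories are kept
ROBOT_RADIUS = 0.3      # meters, assumed
HORIZON = 3.0           # trajectory length in meters, assumed
STEP = 0.1              # spacing of trajectory samples in meters, assumed


def scan_to_points(ranges: Sequence[float], angle_min: float,
                   angle_increment: float) -> List[Point]:
    """Convert laser-scan ranges to (x forward, y left) obstacle points."""
    pts = []
    for i, r in enumerate(ranges):
        if math.isfinite(r):  # skip "no return" readings such as inf
            a = angle_min + i * angle_increment
            pts.append((r * math.cos(a), r * math.sin(a)))
    return pts


def top_k_angles(angles: Sequence[float], confidences: Sequence[float],
                 k: int = TOP_K) -> List[float]:
    """Return the k departure angles with the highest confidence, best first."""
    best = heapq.nlargest(k, range(len(angles)), key=lambda i: confidences[i])
    return [angles[i] for i in best]


def straight_trajectory(angle: float) -> List[Point]:
    """Sample a straight line leaving the robot at `angle` (assumed primitive)."""
    n = int(round(HORIZON / STEP))
    return [(s * STEP * math.cos(angle), s * STEP * math.sin(angle))
            for s in range(1, n + 1)]


def is_collision_free(traj: List[Point], obstacles: List[Point],
                      radius: float = ROBOT_RADIUS) -> bool:
    """True if no obstacle point lies within `radius` of any trajectory sample."""
    r2 = radius * radius
    for px, py in traj:
        for ox, oy in obstacles:
            if (px - ox) ** 2 + (py - oy) ** 2 <= r2:
                return False
    return True


def select_trajectories(angles: Sequence[float], confidences: Sequence[float],
                        obstacles: List[Point]) -> List[Tuple[float, List[Point]]]:
    """Keep the top-k angles by confidence, then drop colliding trajectories."""
    safe = []
    for angle in top_k_angles(angles, confidences):
        traj = straight_trajectory(angle)
        if is_collision_free(traj, obstacles):
            safe.append((angle, traj))
    return safe
```

Because the network only narrows the candidate set, the explicit collision check remains the safety guarantee. A confident but colliding angle is still rejected.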